FCC proposes making AI-generated robocalls illegal over election bad actors

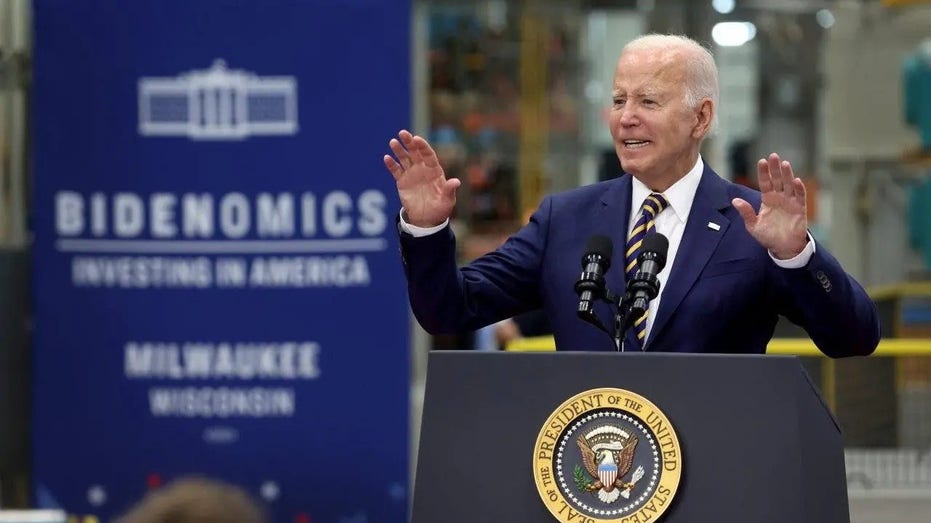

The Federal Communications Commission (FCC) is taking action to crack down on robocalls that use artificial intelligence-generated voices after a fake voice that sounded like President Biden was used in calls to voters ahead of the New Hampshire primary election.

The federal agency issued a proposal Wednesday to officially recognize AI-generated voices as "artificial" under the Telephone Consumer Protection Act (TCPA), which would make it illegal to use voice-cloning technology in calls to consumers.

“No matter what celebrity or politician you favor, or what your relationship is with your kin when they call for help, it is possible we could all be a target of these faked calls,” FCC Chairwoman Jessica Rosenworcel said in a statement. “That’s why the FCC is taking steps to recognize this emerging technology as illegal under existing law, giving our partners at State Attorneys General offices across the country new tools they can use to crack down on these scams and protect consumers.”

The FCC said the attorneys general of 26 states already support the proposal, which the agency first floated in November, to outlaw AI robocalls under the TCPA. The agency also has a memorandum of understanding with 48 states to work together to combat robocalls.

The agency’s move comes as the New Hampshire Attorney General’s Office is investigating the source of a robocall that went out to residents of the state last month. The call used a fake voice of Biden urging voters there not to participate in the presidential primary and instead “save” their votes for the November general election.

In the past, spoofed and spam calls were typically produced with a combination of statistical methods and audio dubbing and clipping. Because public figures such as Biden have been publicly recorded saying a wide range of words, powerful new AI tools can draw on that material to produce audio that is difficult to distinguish from authentic recordings.

“The rise of these types of calls has escalated during the last few years as this technology now has the potential to confuse consumers with misinformation by imitating the voices of celebrities, political candidates, and close family members,” the FCC said in a news release.

While federal law prohibits knowing attempts to limit people's ability to vote or to sway their voting decisions, regulation specifically addressing the deceptive use of AI has yet to be implemented.

FOX News Digital’s Greg Norman and Nikolas Lanum contributed to this report.