CEO backs Gov. Newsom’s ‘unusual’ executive order to study AI’s risk and uses: ‘This is good policy’

In California’s latest push to regulate artificial intelligence, Gov. Gavin Newsom has signed an executive order to study the uses and risks of the technology.

One expert in the field, C3.ai CEO Thomas Siebel, criticized politicians’ resistance to A.I., arguing that a lot of new policies are “just kind of crazy, written by people who have no understanding of what they’re talking about.”

On the other hand, Siebel heaped praise on California's A.I. public policy proposal, calling it a "cogent, thoughtful, concise, productive and really extraordinarily positive public policy."

"This executive order, N-12-23, that was signed by Governor Newsom, this is really unusual as it relates to a public policy proposal and as it relates to applying and exploring generative A.I.," Siebel began.

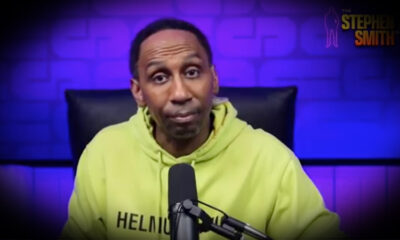

"I would describe this document as cogent, thoughtful, concise, productive and really extraordinarily positive public policy, where they propose to redouble efforts in a big way to understand the risks and the benefits of generative A.I. as it relates to the people of California and the systems in California, and to find ways to deploy these technologies to the benefit of the people of California," Siebel explained during an appearance on "Cavuto: Coast to Coast."

Substitute host David Asman responded to Siebel’s take, saying that he was “a little shocked” to see a company CEO “heap praise” onto the people who could be regulating his company in the future.

Siebel responded, saying, "If you read the document carefully, there is no regulation on A.I. companies."

“There is no proposal for regulation here. This is a proposal to study the benefits and risks of generative A.I. and figure out how to apply generative A.I. in the state of California to the benefit of the people,” Siebel continued.

"This could be flood mitigation, wildfire mitigation, safe power generation and distribution, fraud avoidance, what have you, to how you avoid the risks associated with privacy and what have you, and how to apply these technologies to the benefit of the people. This is good policy."

Asman subsequently asked Siebel whether privacy is the biggest risk of A.I., to which Siebel firmly responded, "Oh, there are risks that are bigger than privacy."

"The privacy issues are daunting, but A.I. will be used to ration health care. A.I. will be used for social media scoring. To evaluate who can fly, who can get insurance, who can vote, and who can go to college," Siebel explained. "You know, they do this in China today. They will do this in the United States. So there's a lot of very, very detrimental attributes to the application of A.I. and generative A.I."

"This," Siebel concluded of Newsom's initiative, "is a cogent proposal to understand them and avoid those risks."

Read the full article here